半监督与弱监督图像分割

半监督与弱监督图像分割

# 半监督与弱监督语义分割

# 1、任务简介

半监督语义分割是指:数据集中有少量的 pixel-level 标注以及大量的 image-level 标注

弱监督语义分割是指:数据集中全是 image-level 的标注

# 2、实验设置简介

# 半监督语义分割的实验设置

半监督的主要数据集是PASCAL VOC 2012 数据集及其扩展数据集 SBD

| 名称 | 训练集 | 验证集 | 测试 | 类别数 |

|---|---|---|---|---|

| PASCAL VOC 2012 | 1464 | 1449 | 1456 | 21 |

| Semantic-Boundaries-Dataset (SBD) | 8498 | 2857 | 0 | 21 |

| PASCAL VOC 2012 Aug | 10582 | 1449 | 1456 | 21 |

感谢 博客 (opens new window) 的介绍,下面是 PASCAL VOC 2012 Aug 中 10582张图像的由来:

- sbd_train(8498)=和voc_train重复的图片(1133)+和voc_val重复的图片(545)+sbd_train真正补充的图片(6820)

- sbd_val(2857)=和voc_train重复的图片(1)+和voc_val重复的图片(558)+sbd_val真正补充的图片(2298)

- voc_train(1464) + voc_val(1449)+sbd_train真正补充的图片(6820) + sbd_val真正补充的图片(2298)=12031

- 用原来的 voc_val(1449) 作为验证集,剩下的 12031 - voc_val(1449) =10582 都可以用作训练,就是trainaug(10582)

常常会看到使用以下的实验设置代称,现在做一些解释

- 1.4k strong :即为原版PASCAL VOC 2012 的 1464 张训练集,带有 pixel-level 的强监督,一般作为Baseline

- 10.6k weak:即为PASCAL VOC 2012 + SBD 的训练集之和,带有image-level 的弱监督。

- 10.6k strong:即为PASCAL VOC 2012 + SBD 的训练集之和,带有pixel-level 的强监督,一般作为性能上限

半监督语义分割常见的实验设置是

- 1/8:PASCAL VOC 2012 12.5% labeled (opens new window),即是使用1323张图像的标注

- 1/20:PASCAL VOC 2012 5% labeled (opens new window),即是使用529张图像的标注

- 1/50:PASCAL VOC 2012 2% labeled (opens new window),即是使用212张图像的标注

- 1/106:PASCAL VOC 2012 1% labeled (opens new window),即是使用100张图像的标注

- 1.4k strong + 9.2k weak:使用1.4k 原始VOC数据集中的训练图像标注,剩下的9.2k 图像作为未标注图像

半监督语义分割有两种常见的实验设置即为在PASCAL VOC 2012 Aug数据集上,使用1.4k strong + 9.2k weak,探索各类方法在这种数据分布下的实验性能。还有一种实验设置是,在PASCAL VOC 2012 Aug数据集上,使用1/50、1/20、1/8 的数据作为强监督,剩下的数据作为弱监督探索各类方法的实验性能。

模型情况,一般来讲都是使用 Deeplab 系列的分割模型,主要有以下几种搭配

- DeepLab v1-vgg16:VGG16 Backbone 的 DeepLabv1

- DeepLab v2-resnet101:ResNet101 Backbone 的 DeepLabv2

因为PASCAL VOC 2012 的验证集是给定了的,而测试集的精度需要去官网上 submit,所以一般论文都会报告 PASCAL VOC 2012 的 val set 上的性能,一部分论文会附上 test set 上的性能。

- 参考资料:https://paperswithcode.com/task/semi-supervised-semantic-segmentation

# 弱监督语义分割的实验设置

常常会看到使用以下的实验设置代称,现在做一些解释

- 1.4k strong :即为原版PASCAL VOC 2012 的 1464 张训练集,带有 pixel-level 的强监督,一般作为Baseline

- 10.6k weak:即为PASCAL VOC 2012 + SBD 的训练集之和,带有image-level 的弱监督。

- 10.6k strong:即为PASCAL VOC 2012 + SBD 的训练集之和,带有pixel-level 的强监督,一般作为性能上限

弱监督语义分割常见的实验设置是

1.4k strong + 9.2k weak:使用1.4k 原始VOC数据集中的训练图像作为强监督,剩下的9.2k 图像作为弱监督

参考资料:https://paperswithcode.com/task/weakly-supervised-semantic-segmentation

# 3、半监督语义分割的经典对比方法简介

# 半监督语义分割的经典对比方法

# 弱监督语义分割的经典对比方法

# 4、半监督语义分割论文

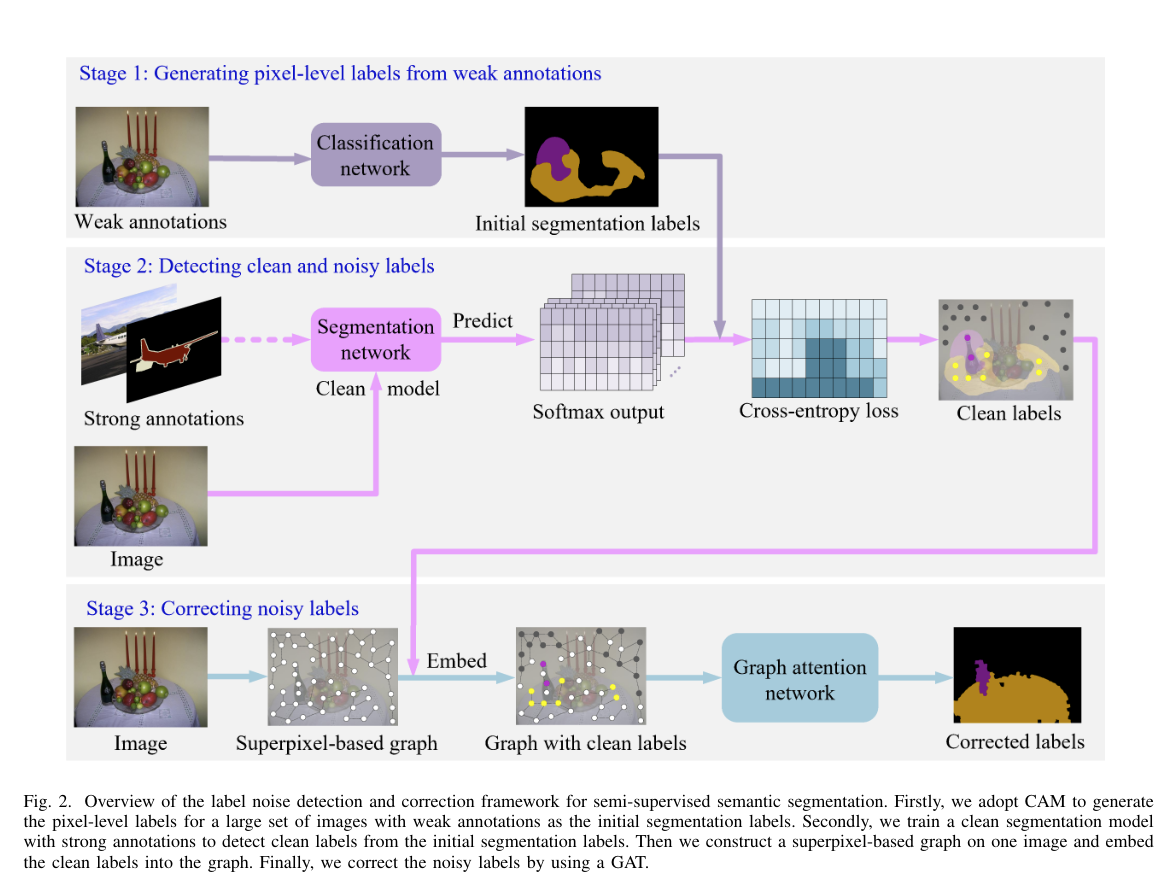

Learning from Pixel-Level Label Noise: A New Perspective for Semi-Supervised Semantic Segmentation.

作者:Rumeng Yi, Yaping Huang, Qingji Guan, Mengyang Pu, and Runsheng Zhang. (北交)

Code:无

从噪声的视角去看待半监督的语义分割任务,引入基于超像素的图建模图中像素的空间相邻性和语义相似性,利用 GAT 来修正带噪标签,也用到了CRF做细化,将修正后的标签一起再来训分割模型。

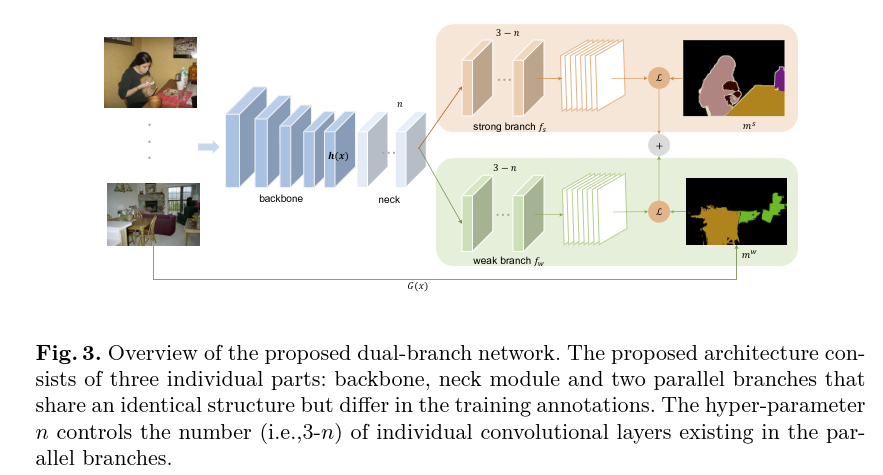

Semi-supervised Semantic Segmentation via Strong-weak Dual-branch Network.

作者:Wenfeng Luo, Meng Yang.(中山大学)

Code:无

论文主要的贡献是着眼于改进半监督语义分割的 Image-level 样本的利用方式,作者使用共享 Backbone 和 Neck的双分支网络,分为 Strong 分支以及 Weak 分支,将强监督样本送入 Strong 分支,将弱监督样本送入 Weak 分支,可以较好的消除监督不一致的现象,有助于缓解强弱样本不平衡问题。训练完成后,弱分支就不再需要了,其在训练过程中起到正则化的作用,加强了 Backbone 以及 Neck 网络的泛化能力。

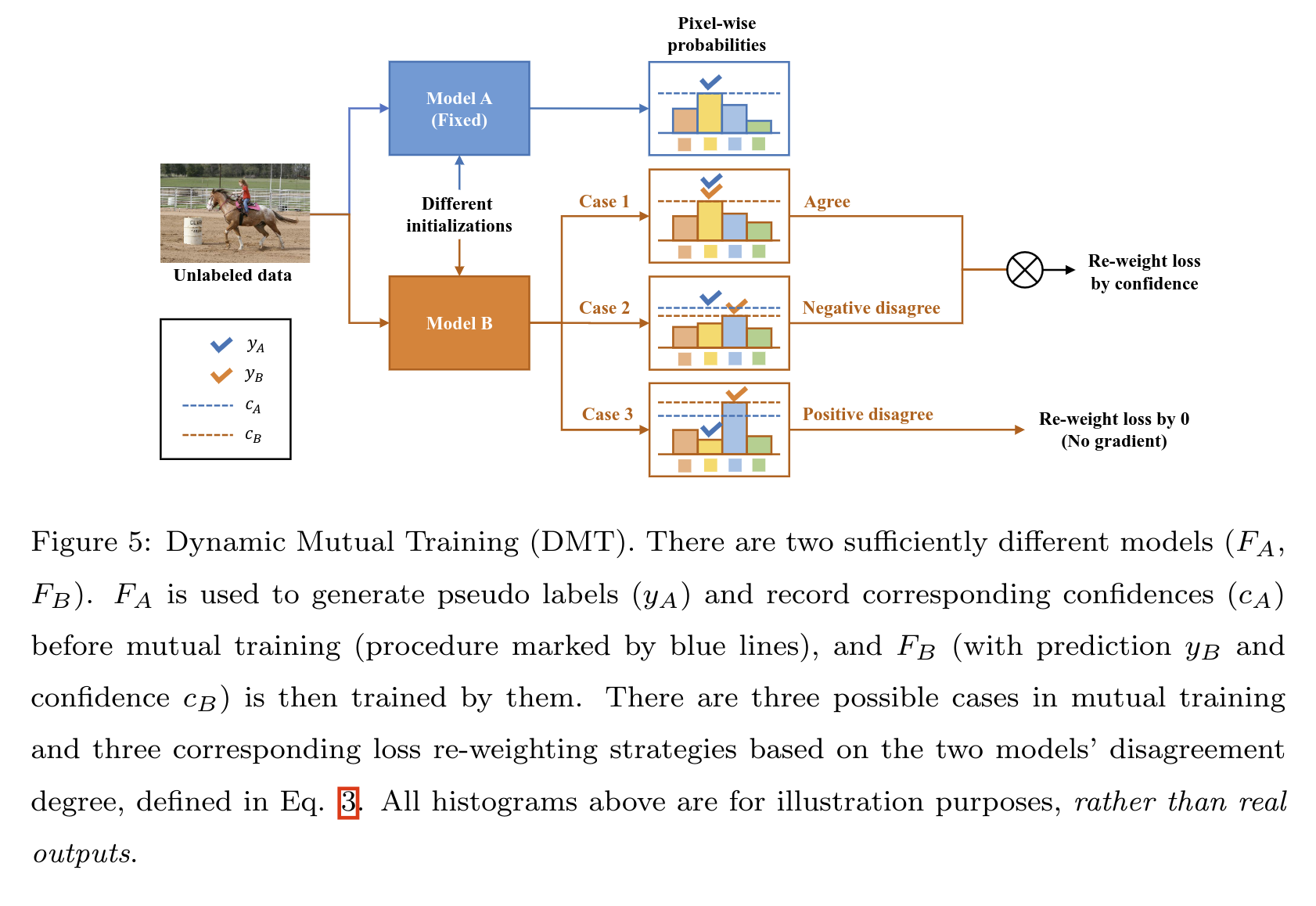

DMT: Dynamic Mutual Training for Semi-Supervised Learning.

作者:Zhengyang Feng, Qianyu Zhou, Qiqi Gu, Xin Tan, Guangliang Cheng, Xuequan Lu, Jianping Shi, Lizhuang Ma.(商汤以及上交DMCV实验室)

发表:arXiv (opens new window), 准备投PR

DMT 提出了一种方法,使用两个模型对伪标签生成动态权重。对样本划分了三种情况给予不同的权重,以迭代式的方法将伪标签 Refine 到越来越好,并且生成像素级的权重促进模型学习。

# 5、弱监督语义分割论文

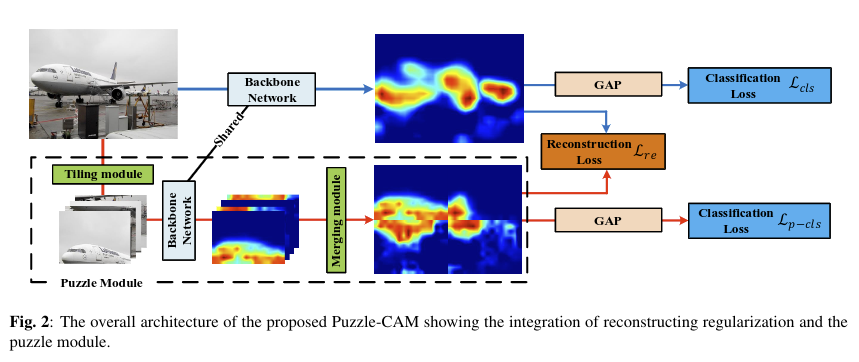

Puzzle-CAM: Improved localization via matching partial and full features.

作者:Sanghyun Jo, In-Jae Yu. (KAIST)

发表:ICIP 2021,arXiv (opens new window)

性能:

- DeepLabv3plus + ResNest-269

- val:66.9

- test:67.7

- DeepLabv3plus + ResNest-269

- val:71.9

- test:72.2

- DeepLabv3plus + ResNest-269

论文主要的贡献是着眼于改进半监督语义分割的 Image-level 样本的利用方式,作者使用共享 Backbone 和 Neck的双分支网络,分为 Strong 分支以及 Weak 分支,将强监督样本送入 Strong 分支,将弱监督样本送入 Weak 分支,可以较好的消除监督不一致的现象,有助于缓解强弱样本不平衡问题。训练完成后,弱分支就不再需要了,其在训练过程中起到正则化的作用,加强了 Backbone 以及 Neck 网络的泛化能力。

Self-supervised Equivariant Attention Mechanism for Weakly Supervised Semantic Segmentaion.(SEAM)

- 作者:

- 发表:arXiv (opens new window)

- Code:GitHub (opens new window)

- 性能:

- ResNet38d + AffinityNet + DeepLabv1

- val:64.5

- test:65.7

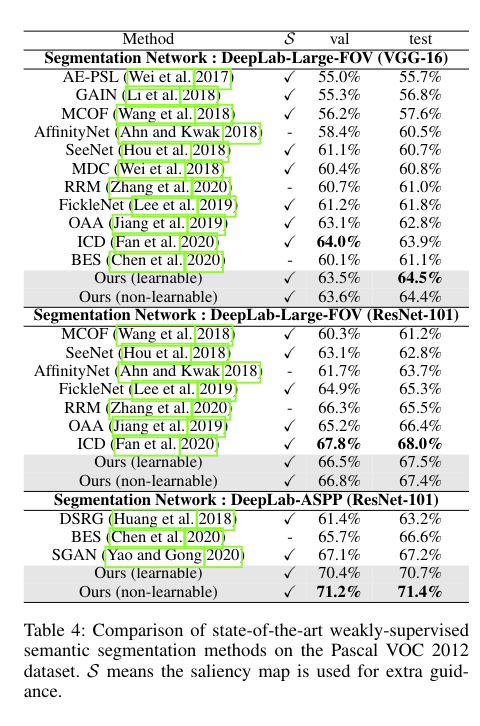

Discriminative Region Suppression for Weakly-Supervised Semantic Segmentation.(DRS)(参考文献较为完善)

- 发表:AAAI2021,arXiv (opens new window)

- Code:GitHub (opens new window)

- 性能:

- VGG-16 + AffinityNet + DeepLabv1

- val:63.6

- test:64.5

- ResNet-101 + AffinityNet + DeepLabv1

- val:66.8

- test:67.5

- ResNet-101 + AffinityNet + DeepLabv2

- val:71.2

- test:71.4

- VGG-16 + AffinityNet + DeepLabv1

Causal Intervention for Weakly Supervised Semantic Segmentation.(CONTA)

- 发表:NeuIPS 2020 Oral,arXiv (opens new window)

- Code:GitHub (opens new window)

- 性能:

- IRNet+CONTA

- val:65.3

- test:64.8

- SEAM+CONTA

- val:66.1

- test:66.7

- IRNet+CONTA

Weakly Supervised Learning of Instance Segmentation with Inter-pixel Relations(IRNet)

- 发表:CVPR 2019,CVPR 2019 (opens new window)

- Code: GitHub (opens new window)

- 性能:

- ResNet50 + DeepLabv2

- val:63.5

- test:64.8

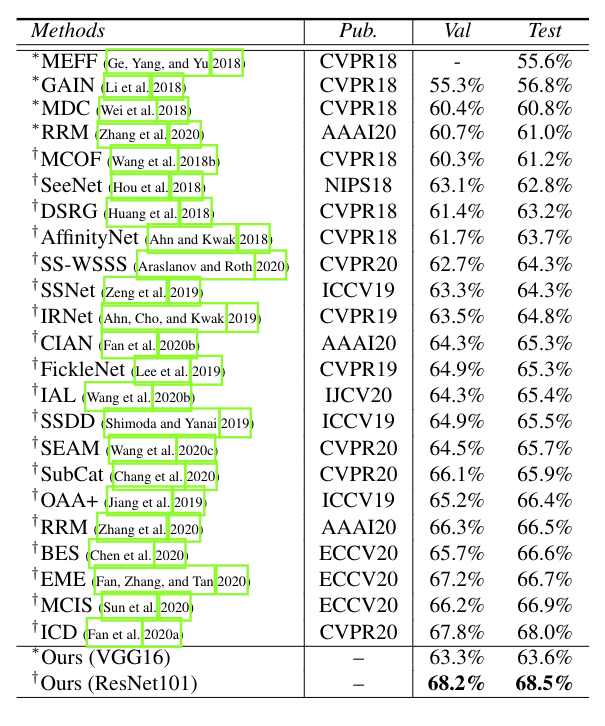

Group-Wise Semantic Mining for Weakly Supervised Semantic Segmentation.(参考文献较为完善)

- 发表:AAAI2021,arXiv (opens new window)

- Code:GitHub (opens new window)

- 性能:

- VGG-16 + DeepLabv2

- val:63.3

- test:63.6

- ResNet-101 + DeepLabv2

- val:68.2

- test:68.5

- VGG-16 + DeepLabv2

Weakly-Supervised Image Semantic Segmentation Using Graph Convolutional Networks.

- 发表:ICME 2021,arXiv (opens new window)

- Code:GitHub (opens new window)

- 性能:

- ResNet-101 + DeepLabv2

- val:66.7

- test:68.8

- ResNet-101 + DeepLabv2 + Backbone COCO Pretrain

- val:68.7

- test:69.3

- ResNet-101 + DeepLabv2

Leveraging Instance-, Image- and Dataset-Level Information for Weakly Supervised Instance Segmentation.(LIID)(参考文献较为全面)

- 发表:TPAMI 2020,IEEE (opens new window)

- Code:GitHub (opens new window)

性能: - ResNet-101 + DeepLabv2 - val:66.5 - test:67.5 - ResNet-101 + DeepLabv2 + Backbone 24K ImageNet Pretrain - val:67.8 - test:68.3 - Res2Net-101 + DeepLabv2 - val:69.4 - test:70.4

Learning Integral Objects With Intra-Class Discriminator for Weakly-Supervised Semantic Segmentation.

发表:CVPR 2020,CVPR (opens new window)

性能:

VGG-16 + DeepLabv1

- val:61.2

- test:60.9

ResNet101 + DeepLabv1

val:64.1

test:64.3

| 实验设置 | val set | test set | Paper | Code | ||

|---|---|---|---|---|---|---|

| ICD | CVPR 2020 | ResNet101 + DeepLabv1 | 64.1 | 64.3 | CVPR (opens new window) | link (opens new window) |

| LIID | TPAMI 2020 | Res2Net-101 + DeepLabv2 | 69.4 | 70.4 | IEEE (opens new window) | GitHub (opens new window) |

| WSGCN | ICME 2021 | ResNet-101 + DeepLabv2 + Backbone COCO Pretrain | 68.7 | 69.3 | arXiv (opens new window) | GitHub (opens new window) |

| Group-WSSS | AAAI 2021 | ResNet-101 + DeepLabv2 | 68.2 | 68.5 | arXiv (opens new window) | GitHub (opens new window) |

| IRNet | CVPR 2019 | ResNet50 + DeepLabv2 | 63.5 | 64.8 | CVPR 2019 (opens new window) | GitHub (opens new window) |

| CONTA | NeuIPS 2020 Oral | SEAM+CONTA | 66.1 | 66.7 | arXiv (opens new window) | GitHub (opens new window) |

| DRS | AAAI 2021 | ResNet-101 + DeepLabv2 | 71.2 | 71.4 | arXiv (opens new window) | GitHub (opens new window) |

| SEAM | CVPR 2020 Oral | ResNet38d + AffinityNet + DeepLabv1 | 64.5 | 65.7 | arXiv (opens new window) | GitHub (opens new window) |

| Puzzle-CAM | ICIP 2021 | ResNest-269 + DeepLabv3plus | 71.9 | 72.2 | arXiv (opens new window) | GitHub (opens new window) |

| AffinityNet | CVPR 2018 | ResNet38d + ? | 61.7 | 63.7 | arXiv (opens new window) | GitHub (opens new window) |

DeepLabv1

| Backbone | val set | test set | Paper | Code | ||

|---|---|---|---|---|---|---|

| IRNet | CVPR 2019 | ResNet50 + DeepLabv2 | 63.5 | 64.8 | CVPR 2019 (opens new window) | GitHub (opens new window) |

| CVPR 2020 | ResNet101 + DeepLabv1 | 64.1 | 64.3 | CVPR (opens new window) | link (opens new window) | |

| LIID | TPAMI 2020 | Res2Net-101 + DeepLabv2 | 69.4 | 70.4 | IEEE (opens new window) | GitHub (opens new window) |

| WSGCN | ICME 2021 | ResNet-101 + DeepLabv2 + Backbone COCO Pretrain | 68.7 | 69.3 | arXiv (opens new window) | GitHub (opens new window) |

| Group-WSSS | AAAI 2021 | ResNet-101 + DeepLabv2 | 68.2 | 68.5 | arXiv (opens new window) | GitHub (opens new window) |

| CONTA | NeuIPS 2020 Oral | SEAM+CONTA | 66.1 | 66.7 | arXiv (opens new window) | [GitHub]( |

DeepLabv2

| Backbone | val set | test set | Paper | Code | ||

|---|---|---|---|---|---|---|

| IRNet | CVPR 2019 | ResNet50 + DeepLabv2 | 63.5 | 64.8 | CVPR 2019 (opens new window) | GitHub (opens new window) |

| CVPR 2020 | ResNet101 + DeepLabv1 | 64.1 | 64.3 | CVPR (opens new window) | link (opens new window) | |

| LIID | TPAMI 2020 | Res2Net-101 + DeepLabv2 | 69.4 | 70.4 | IEEE (opens new window) | GitHub (opens new window) |

| WSGCN | ICME 2021 | ResNet-101 + DeepLabv2 + Backbone COCO Pretrain | 68.7 | 69.3 | arXiv (opens new window) | GitHub (opens new window) |

| Group-WSSS | AAAI 2021 | ResNet-101 + DeepLabv2 | 68.2 | 68.5 | arXiv (opens new window) | GitHub (opens new window) |

| CONTA | NeuIPS 2020 Oral | SEAM+CONTA | 66.1 | 66.7 | arXiv (opens new window) |

上图是Group-WSSS,下一张图是DRS

- 02

- README 美化05-20

- 03

- 常见 Tricks 代码片段05-12