(SimCLRv2) Big Self-Supervised Models are Strong Semi-Supervised Learners


# (SimCLRv2) Big Self-Supervised Models are Strong Semi-Supervised Learners

# Authors: Google, Hinton's group

# Abstract

# Reading

# Purpose and Conclusions of the Paper

# Experiments

# Method

# Background

# Summary

# Contributions

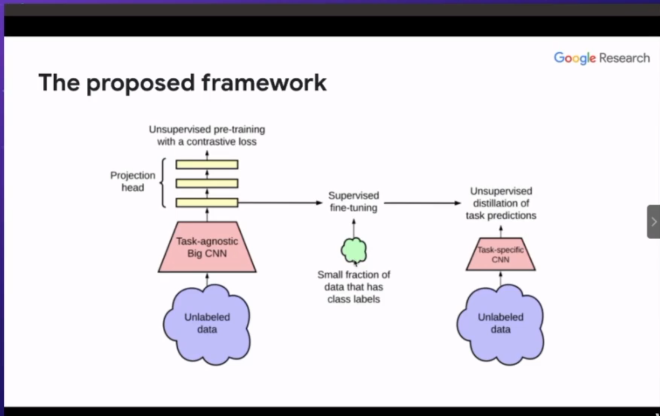

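A big CNN is first pretrained with unsupervised learning and then fine-tuned.

An unsupervised distillation step follows, which trains a student model.

Unsupervised distillation: the fine-tuned model computes soft labels, and the student model is trained to match them.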
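The distillation step can be sketched as a cross-entropy between the teacher's soft labels and the student's predictions. This is a minimal pure-Python illustration, not the authors' implementation: the function names `log_softmax` and `distillation_loss`, the `temperature` parameter, and the use of plain lists of logits are all assumptions made for the example.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax over one list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scaled))
    return [s - log_sum_exp for s in scaled]


def distillation_loss(teacher_logits, student_logits, temperature=1.0):
    """Mean cross-entropy between teacher soft labels and student predictions.

    Both arguments are lists of per-example logit lists (hypothetical format).
    No ground-truth labels are used, so the inputs can be unlabeled.
    """
    total = 0.0
    for t_logits, s_logits in zip(teacher_logits, student_logits):
        # Soft labels: the fine-tuned teacher's class probabilities.
        soft_labels = [math.exp(v) for v in log_softmax(t_logits, temperature)]
        student_log_probs = log_softmax(s_logits, temperature)
        total -= sum(p * lq for p, lq in zip(soft_labels, student_log_probs))
    return total / len(teacher_logits)


if __name__ == "__main__":
    teacher = [[2.0, 0.5, -1.0]]
    print(distillation_loss(teacher, teacher))             # student matches teacher
    print(distillation_loss(teacher, [[-1.0, 0.5, 2.0]]))  # student disagrees
```

The loss is smallest when the student reproduces the teacher's distribution, which is why minimizing it transfers the fine-tuned model's predictions to the student without using labels.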

# Limitations of the Paper

# How the Paper Tells Its Story

Two paradigms for using unlabeled data:

- Task-agnostic use of unlabeled data
  - Unsupervised pre-training + supervised fine-tuning
- Task-specific use of unlabeled data
  - Self-training, pseudo-labeling
  - Label consistency regularization
  - Other label propagation
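As an illustration of the task-specific paradigm, pseudo-labeling can be sketched as keeping only the unlabeled examples on which a model is confident and treating its prediction as the label. This is a generic sketch rather than anything taken from the paper: the function name `pseudo_label`, the `threshold` value of 0.9, and the list-of-probabilities input format are assumptions chosen for the example.

```python
def pseudo_label(probabilities, threshold=0.9):
    """Select confident predictions on unlabeled data as pseudo-labels.

    `probabilities` is a list of per-example class-probability lists
    (hypothetical format). Returns (example_index, predicted_class) pairs
    for examples whose top probability is at least `threshold`.
    """
    selected = []
    for index, probs in enumerate(probabilities):
        label = max(range(len(probs)), key=probs.__getitem__)
        if probs[label] >= threshold:
            selected.append((index, label))
    return selected


if __name__ == "__main__":
    model_outputs = [[0.95, 0.05], [0.60, 0.40], [0.10, 0.90]]
    print(pseudo_label(model_outputs))
```

The selected pairs would then be added to the labeled set for another round of supervised training, which is the self-training loop the list refers to.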

# References

Last updated: 2021/11/03, 23:35:28
